Project - SemanticInformationRetrieval (SIR)

Notes:

- This project is suggested based on a discussion held at MWStake's August 2017 monthly meeting (see line 113 on https://etherpad.wikimedia.org/p/mwstake-2017-08).

- It is the subject of a workshop at the upcoming SMWCon Fall 2017: SMWCon_Fall_2017/Taking the first step towards a Semantic Information Retrieval System (SIRS).

- This is a working page representing consolidated current views. It is therefore subject to frequent changes.

- Preliminary, not yet coherent prototype code snippets can be found at https://github.com/MWStake/SemanticInformationRetrieval. They were extracted from my experimental keyword search, which is oriented towards structured data and is updated upon page save or API edit.

Preamble

This endeavor shall heed the following maxims:

Take the time to go fast. Good architecture maximizes the number of decisions not made. Write the code you wish you had.

Example

- Project page: https://dataspects.com/dataspects-search

- Development version: https://dataspects.com/search

Intention/Goal/Purpose/Fundamentals (Current Thoughts)

Relevant information retrieval represents the primary human-computer interface towards our customers' end users. It is the ultimate end of our guild's service provision loop between knowledge workers and knowledge consumers.

SemanticInformationRetrieval (SIR) aims to provide a single Google-like search text box (with subsequent faceted interactive search) covering all of an organization's structured and unstructured knowledge resources, so that they can be accessed with the least amount of mental overhead.

Based on this mission declaration, SIR shall consider the following idiosyncrasies and features:

- SIR provides its DSL one abstraction level above MediaWiki's API because, from an organization's perspective, SMW is only one component of its comprehensive knowledge management system (albeit possibly the central one).

- SIR provides versatile polymorphic search interfaces for full-text search as well as (subsequent) faceted interactive search along subject-affinity paths through semanticized information (EPPO-style knowledge topics).

- SIR comprises several dedicated MediaWiki extensions:

- Extension:SIRIndexer providing a DSL for mapping and indexing both a page's factorized information in template instances and its free text.

- Extension:SIRUserInterface providing an API for search interfaces on special pages and by parser functions.

- Extension:SIRBackend providing fast "ES cached" page wikitext HTML delivery.

- Such search interfaces shall be easy to configure to cover an arbitrary facet of any complexity from the outset, i.e. like "searching in a namespace" but generalized to "searching in a facet".

- Truly relevant full-text search cannot be achieved without tuning the analysis process at both index time (defining features) and query time (extracting signals). That's why SIR provides DSL methods that

- e.g. in the case of Elasticsearch, take raw JSON as input, and

- are sufficiently high-level and concrete that improving search relevance for a customer's SMW becomes straightforward without low-level coding, e.g. by adding and composing document fields (SIR DSL) and defining their analysis (e.g. Elasticsearch JSON input).

- SIR can be morphed into (S)MW's single source of truth, i.e. replacing its SQL backend.
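The points above on index-time versus query-time analysis and on "searching in a facet" could be sketched as follows. This is only an illustration in Python of the kind of raw Elasticsearch JSON such DSL methods might accept, not the SIR DSL itself. The function names, the analyzer names `sir_index` and `sir_query`, the filter name `sir_synonyms`, the synonym entry and the `facet` field are all hypothetical, and the typeless mapping layout assumes a recent Elasticsearch version.

```python
import json


def build_index_body(text_fields):
    """Build index settings/mappings with separate index-time and query-time analyzers.

    All analyzer, filter and field names here are hypothetical examples.
    """
    analysis = {
        "filter": {
            # Synonyms are expanded at query time only (extracting signals).
            "sir_synonyms": {
                "type": "synonym_graph",
                "synonyms": ["smw, semantic mediawiki"],
            }
        },
        "analyzer": {
            # Index time: define the features stored for each text field.
            "sir_index": {
                "type": "custom",
                "tokenizer": "standard",
                "filter": ["lowercase", "asciifolding"],
            },
            # Query time: same normalization plus synonym expansion.
            "sir_query": {
                "type": "custom",
                "tokenizer": "standard",
                "filter": ["lowercase", "asciifolding", "sir_synonyms"],
            },
        },
    }
    properties = {
        name: {"type": "text", "analyzer": "sir_index", "search_analyzer": "sir_query"}
        for name in text_fields
    }
    # A non-analyzed keyword field used to restrict a search to one facet.
    properties["facet"] = {"type": "keyword"}
    return {"settings": {"analysis": analysis}, "mappings": {"properties": properties}}


def build_facet_query(text, facet, fields):
    """Full-text query restricted to one facet, analogous to searching in a namespace."""
    return {
        "query": {
            "bool": {
                "must": [{"multi_match": {"query": text, "fields": list(fields)}}],
                "filter": [{"term": {"facet": facet}}],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_index_body(["title", "fulltext"]), indent=2))
    print(json.dumps(build_facet_query("installation", "Manual", ["title", "fulltext"]), indent=2))
```

Keeping the analysis definition as plain JSON-compatible data means a relevance engineer could change analyzers without touching the code that composes document fields.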

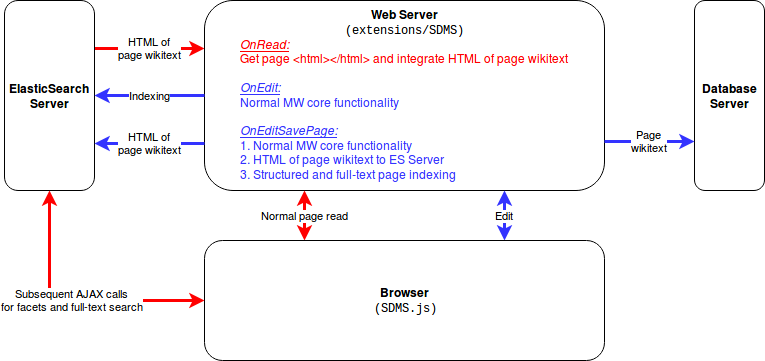

Architectural Ideas (work in progress)

- When delivering a page, the web server assembles the response using normal MW functionality (caching!) but leaves a placeholder where the page's wikitext HTML would normally go (see https://www.mediawiki.org/wiki/Manual:Article.php, method Article::view; would https://www.mediawiki.org/wiki/Manual:Hooks/ArticleFromTitle be an appropriate hook?).

- The page's HTML is obtained from the Elasticsearch server.

- Extension:SDMS has a configuration setting to override normal MW page delivery functionality accordingly.

- When delivering a page in edit mode, no normal MW functionality is changed.

- When receiving an edited page:

- Execute the normal MW page store procedure.

- Update the corresponding Elasticsearch page document with:

- Non-analyzed raw HTML, obtained by parsing the page's wikitext into HTML

- Full-text analyzed HTML

- Structured page data, obtained by analyzing template calls (direct annotations are ignored because they are considered bad practice).
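The three parts of the page document listed above could be assembled roughly as in the following Python sketch. It is an illustration under several assumptions: the field names `raw_html`, `fulltext_html` and `templates` are hypothetical, the rendered HTML is assumed to come from MediaWiki's parser and is simply passed in, and the regular expression only handles flat template calls with named parameters (no nesting, no pipes inside parameter values).

```python
import re

# Matches flat template calls such as {{Person|name=Ada|role=Engineer}}.
# Nested templates and pipes inside parameter values are not handled.
TEMPLATE_RE = re.compile(r"\{\{([^{}|]+)((?:\|[^{}]*)?)\}\}")


def extract_template_calls(wikitext):
    """Return the named parameters of each template call found in the wikitext."""
    calls = []
    for match in TEMPLATE_RE.finditer(wikitext):
        name = match.group(1).strip()
        if name.startswith("#"):
            # Skip parser functions such as {{#ask: ...}}.
            continue
        params = {}
        for part in match.group(2).split("|")[1:]:
            key, sep, value = part.partition("=")
            if sep:  # keep named parameters only
                params[key.strip()] = value.strip()
        calls.append({"template": name, "params": params})
    return calls


def build_page_document(title, wikitext, rendered_html):
    """Assemble a page document; all field names are hypothetical.

    Direct annotations like [[Has role::Engineer]] are deliberately not
    extracted, in line with the policy stated above.
    """
    return {
        "title": title,
        "raw_html": rendered_html,       # meant to be stored, not analyzed
        "fulltext_html": rendered_html,  # meant to be analyzed via the index mapping
        "templates": extract_template_calls(wikitext),
    }


if __name__ == "__main__":
    text = "{{Person|name=Ada|role=Engineer}}\nSome text [[Has role::Engineer]]."
    print(build_page_document("Ada", text, "<p>Some text Engineer.</p>"))
```

Whether the raw and the analyzed HTML live in two fields or in one field with two mappings is a design choice left open here; two fields are used only to mirror the list above.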

- When receiving an edited page: